SuperOptiX Now Supports OpenAI's GPT-OSS Models!

Superagentic AI is excited to announce that SuperOptiX now supports OpenAI's latest open-weight language models: GPT-OSS-20B and GPT-OSS-120B!

What are GPT-OSS Models?

OpenAI has released two powerful open-weight language models designed for advanced reasoning and complex tasks. Both use a mixture-of-experts (MoE) architecture. GPT-OSS-120B has 117B total parameters, of which 5.1B are active per token, making it suitable for production environments and high-reasoning use cases. GPT-OSS-20B has 21B total parameters with 3.6B active per token and is optimized for lower latency and specialized use cases.
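As a quick illustration of what "active parameters" means for these mixture-of-experts models, the Python snippet below computes the share of weights used per token from the counts quoted above. The helper name `active_fraction` is our own for illustration and is not part of SuperOptiX.

```python
# Published parameter counts, in billions, for the two GPT-OSS models.
GPT_OSS_PARAMS = {
    "gpt-oss-120b": {"total": 117.0, "active": 5.1},
    "gpt-oss-20b": {"total": 21.0, "active": 3.6},
}


def active_fraction(total_b: float, active_b: float) -> float:
    """Illustrative helper (not a SuperOptiX API): share of parameters used per token."""
    if total_b <= 0 or not 0 <= active_b <= total_b:
        raise ValueError("expected total > 0 and 0 <= active <= total")
    return active_b / total_b


for name, p in GPT_OSS_PARAMS.items():
    share = active_fraction(p["total"], p["active"])
    print(f"{name}: {p['active']}B of {p['total']}B parameters active per token ({share:.1%})")
```

The result, roughly 4.4% for GPT-OSS-120B and 17.1% for GPT-OSS-20B, is why an MoE model can be cheaper to run than its total size suggests: compute per token scales with the active parameters, while memory still has to hold all of them.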

Key Features

These models come with several advanced capabilities:

Permissive Apache 2.0 License

Build freely without copyleft restrictions or patent risk. This makes these models ideal for experimentation, customization, and commercial deployment.

Configurable Reasoning Effort

Adjust reasoning effort (low, medium, high) to match your use case: lower effort reduces latency, while higher effort gives the model more room to work through difficult problems.
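SuperOptiX does not expose this setting yet. As a sketch of the underlying mechanism, OpenAI's model card for GPT-OSS says the level can be set in the system prompt, for example "Reasoning: high". The hypothetical helper below builds such a chat message list in plain Python. Whether a particular backend's chat template passes this line through to the model unchanged is an assumption you should verify, since some backends offer a dedicated setting instead.

```python
from typing import Dict, List

REASONING_LEVELS = ("low", "medium", "high")


def build_messages(user_prompt: str, effort: str = "medium") -> List[Dict[str, str]]:
    """Hypothetical helper: put the GPT-OSS reasoning level in the system prompt.

    Assumes the backend forwards the system message to the model unchanged.
    """
    level = effort.strip().lower()
    if level not in REASONING_LEVELS:
        raise ValueError(f"effort must be one of {REASONING_LEVELS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {level}"},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages("Outline a migration plan for a legacy API.", effort="high")
print(messages[0]["content"])
```

A latency-sensitive chatbot might stay at low, while a multi-step planning agent may justify high.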

Full Chain-of-Thought

Gain complete access to the model's reasoning process, facilitating easier debugging and increased trust in outputs. This feature is particularly valuable for developers who need to understand how the model arrives at its conclusions.
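SuperOptiX does not surface the reasoning trace yet. If you call a GPT-OSS model through Ollama's /api/chat endpoint directly, recent Ollama versions return the reasoning of thinking-capable models in a separate `thinking` field next to the final `content`; treat that field name as an assumption to check against your Ollama version. The sketch below separates the two so you can log the reasoning for debugging while showing users only the answer. The helper names are hypothetical.

```python
from typing import NamedTuple


class SplitReply(NamedTuple):
    reasoning: str  # the model's chain of thought, for logs and debugging
    answer: str     # the final answer to show the user


def split_reply(response: dict) -> SplitReply:
    """Split an /api/chat-style response into reasoning and final answer.

    Assumes the reasoning arrives in message["thinking"]; a missing field
    simply yields an empty reasoning string.
    """
    message = response.get("message") or {}
    return SplitReply(
        reasoning=(message.get("thinking") or "").strip(),
        answer=(message.get("content") or "").strip(),
    )


def preview(text: str, max_chars: int = 200) -> str:
    """Truncate long reasoning traces for log output."""
    return text if len(text) <= max_chars else text[:max_chars] + "..."
```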

Fine-tunable

Fully customize models to your specific use case through parameter fine-tuning. The larger GPT-OSS-120B model can be fine-tuned on a single H100 node, while the smaller GPT-OSS-20B model can even be fine-tuned on consumer hardware.

Agentic Capabilities

Use the models' native capabilities for function calling, web browsing, Python code execution, and Structured Outputs. These capabilities enable the models to interact with external systems and perform complex multi-step tasks.
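These capabilities are not yet wired into SuperOptiX. As a sketch of what function calling looks like one level down, the snippet below builds a request for Ollama's /api/chat endpoint with a `tools` list in the JSON-schema function format, then runs any `tool_calls` the model returns. The `get_weather` tool and the helper names are hypothetical, and the reply shape (`message.tool_calls[i].function.name` and `.arguments`) is an assumption based on Ollama's API documentation.

```python
import json
from typing import Any, Callable, Dict, List


def make_tool(name: str, description: str, properties: Dict[str, Any],
              required: List[str]) -> Dict[str, Any]:
    """Describe a function in the JSON-schema tool format used by Ollama's /api/chat."""
    return {
        "type": "function",
        "function": {
            "name": name,
            "description": description,
            "parameters": {"type": "object", "properties": properties, "required": required},
        },
    }


def get_weather(city: str) -> str:
    """Hypothetical local tool; a real one would call a weather service."""
    return f"Sunny in {city}"


# Only functions registered here can be triggered by the model.
TOOL_REGISTRY: Dict[str, Callable[..., Any]] = {"get_weather": get_weather}


def run_tool_calls(message: Dict[str, Any]) -> List[Any]:
    """Execute each tool call in an assistant message (assumed Ollama reply shape)."""
    results = []
    for call in message.get("tool_calls") or []:
        fn = call["function"]
        name = fn["name"]
        if name not in TOOL_REGISTRY:
            raise ValueError(f"model requested unknown tool {name!r}")
        args = fn.get("arguments") or {}
        if isinstance(args, str):  # OpenAI-style servers send arguments as a JSON string
            args = json.loads(args)
        results.append(TOOL_REGISTRY[name](**args))
    return results


payload = {
    "model": "gpt-oss:20b",
    "messages": [{"role": "user", "content": "What's the weather in Paris?"}],
    "tools": [
        make_tool(
            "get_weather",
            "Get the current weather for a city.",
            {"city": {"type": "string", "description": "City name"}},
            ["city"],
        )
    ],
    "stream": False,
}
print(json.dumps(payload, indent=2))
```

To try it against a live server, POST `payload` as JSON to http://localhost:11434/api/chat and pass the returned `message` to `run_tool_calls`. The explicit registry means the model can only trigger functions you have deliberately exposed.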

Native MXFP4 Quantization

The models are trained with native MXFP4 precision for the MoE layers, which lets GPT-OSS-120B run on a single 80GB H100 GPU and GPT-OSS-20B run within 16GB of memory.
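A back-of-the-envelope calculation shows why this matters. In the OCP Microscaling (MX) format, MXFP4 stores each weight as a 4-bit FP4 (E2M1) value and shares one 8-bit scale across each block of 32 weights, which averages 4.25 bits per weight. The sketch below applies that figure to every parameter, which is optimistic: the non-MoE weights stay in higher precision, and the KV cache and activations need memory on top.

```python
# MXFP4: 4-bit elements plus one shared 8-bit scale per block of 32 elements.
BLOCK_SIZE = 32
BITS_PER_WEIGHT_MXFP4 = 4 + 8 / BLOCK_SIZE  # 4.25 bits


def weight_gb(params_billion: float, bits_per_weight: float) -> float:
    """Weight storage in GB (1 GB = 1e9 bytes); ignores KV cache and activations."""
    # billions of params * bits per weight / 8 bits per byte = billions of bytes = GB
    return params_billion * bits_per_weight / 8


# Optimistic estimate: treats every weight as MXFP4, not just the MoE layers.
for name, params in [("gpt-oss-120b", 117.0), ("gpt-oss-20b", 21.0)]:
    mxfp4 = weight_gb(params, BITS_PER_WEIGHT_MXFP4)
    bf16 = weight_gb(params, 16)
    print(f"{name}: ~{mxfp4:.1f} GB if every weight were MXFP4 vs ~{bf16:.0f} GB in BF16")
```

That comes to roughly 62 GB versus 234 GB for GPT-OSS-120B and roughly 11 GB versus 42 GB for GPT-OSS-20B, which is consistent with the single-H100 and 16GB targets above.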

SuperOptiX Integration

SuperOptiX, the comprehensive AI agent development framework built on DSPy, now provides seamless integration with GPT-OSS models. While SuperOptiX currently supports basic model management and inference, we're actively working on implementing the advanced features mentioned above. These capabilities will be available in future releases.

How to Use GPT-OSS with SuperOptiX

You can install GPT-OSS models via Ollama, MLX, or HuggingFace.

Recommended: Use Ollama for GPT-OSS Models

For the best performance and reliability with GPT-OSS models, we recommend using Ollama:

- Best Performance: 19.7 tokens/s, compared with 5.2 tokens/s for MLX (HuggingFace: N/A)

- Cross-Platform: Works on all platforms (Windows, macOS, Linux)

- Easy Setup: Simple installation and model management

- Optimized Format: GGUF weights optimized for local inference

- No Extra Server Setup: Ollama runs as a background service, so unlike MLX you don't need to launch a separate model server
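Throughput depends heavily on hardware, so it is worth measuring on your own machine. Ollama's non-streaming /api/generate response includes `eval_count` (tokens generated) and `eval_duration` (in nanoseconds), and their ratio gives tokens per second. The standard-library sketch below is not a SuperOptiX command; the function names are ours, and it assumes Ollama's default port.

```python
import json
import urllib.request


def generate(prompt: str, model: str = "gpt-oss:20b",
             host: str = "http://localhost:11434") -> dict:
    """Send one non-streaming request to Ollama's /api/generate (default port assumed)."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        f"{host}/api/generate", data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def tokens_per_second(result: dict) -> float:
    """Generation speed: eval_count tokens over eval_duration nanoseconds."""
    duration_ns = result["eval_duration"]
    if duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return result["eval_count"] * 1e9 / duration_ns


# Example (requires a running Ollama server with gpt-oss:20b pulled):
# print(f"{tokens_per_second(generate('Hello, how are you?')):.1f} tokens/s")
```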

Warning for Apple Silicon Users

GPT-OSS models (openai/gpt-oss-20b, openai/gpt-oss-120b) cannot run on Apple Silicon through the HuggingFace backend due to mixed-precision conflicts between the F16 and BF16 data types, which Apple's Metal Performance Shaders (MPS) backend does not support.

Use these alternatives for full Apple Silicon compatibility:

- Best option: Ollama (cross-platform, best performance)

- Apple Silicon native: MLX (requires server)

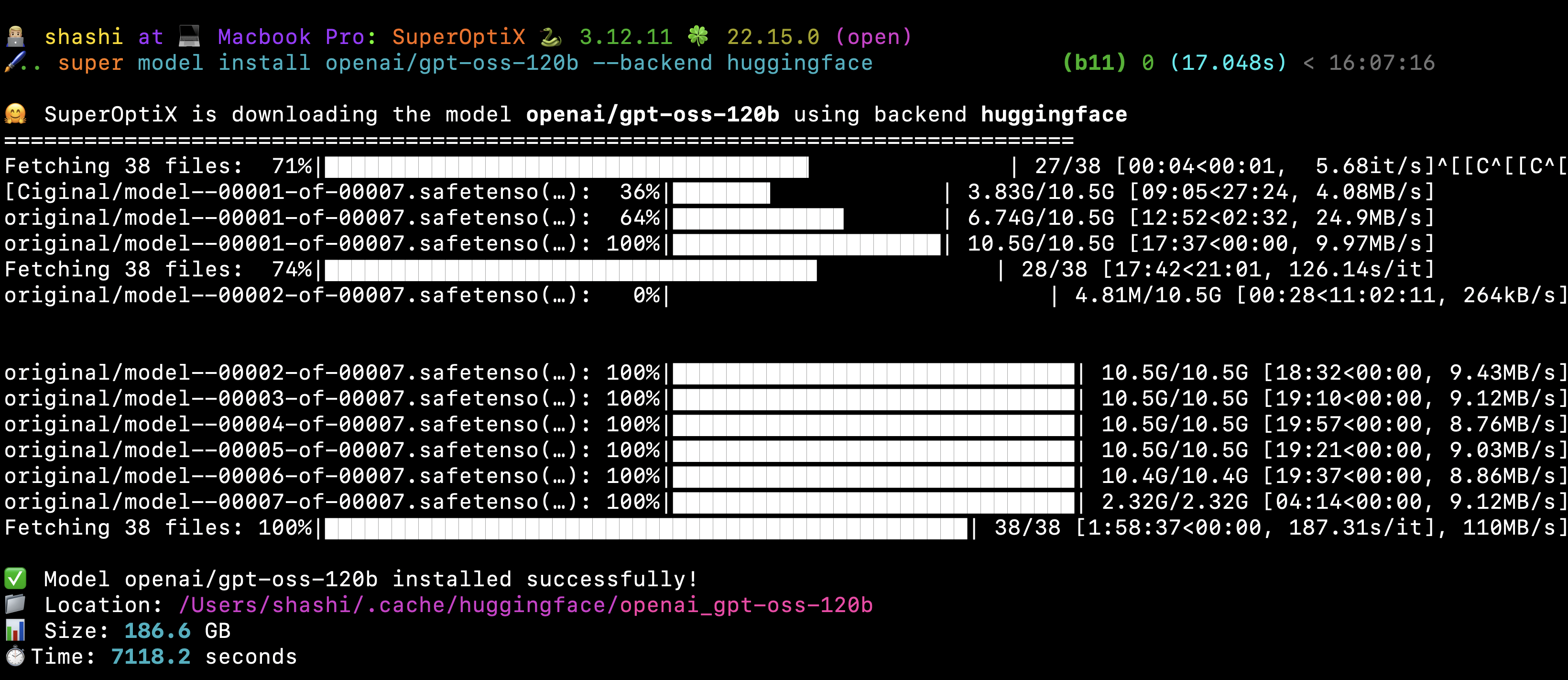

Note: Downloading these models may take a significant amount of time. The GPT-OSS-120B model is approximately 120GB, while the GPT-OSS-20B model is around 40GB. Make sure you have sufficient storage space and a stable internet connection.
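Before starting a large download, you can check free disk space with Python's standard library. The sizes below are the approximate figures quoted in this note, and the 10 GB of headroom is an arbitrary safety margin we chose for illustration; point `path` at the drive where your backend stores its models.

```python
import shutil

# Approximate download sizes quoted in this post, in gigabytes.
APPROX_DOWNLOAD_GB = {"gpt-oss-20b": 40.0, "gpt-oss-120b": 120.0}


def has_room_for(model: str, path: str = ".", headroom_gb: float = 10.0) -> bool:
    """True if the filesystem holding `path` fits the model plus headroom.

    headroom_gb is an arbitrary safety margin, not an official requirement.
    """
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= APPROX_DOWNLOAD_GB[model] + headroom_gb


for name in APPROX_DOWNLOAD_GB:
    status = "enough space" if has_room_for(name) else "free up space first"
    print(f"{name}: {status}")
```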

Quick Start with Ollama (RECOMMENDED)

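Update Ollama to the latest version first; older releases cannot download the GPT-OSS models. Then pull a model with `ollama pull gpt-oss:20b` (or `ollama pull gpt-oss:120b`).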

Using MLX Backend (Apple Silicon)

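This option is for Apple Silicon users who prefer native MLX performance. Unlike Ollama, the MLX backend needs a running model server; the playbook example below expects it at http://localhost:8000.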

Using HuggingFace Backend

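Install the HuggingFace dependencies with `pip install "superoptix[huggingface]"` (the quotes stop shells such as zsh from interpreting the brackets). You can then download the models directly from HuggingFace, keeping in mind the Apple Silicon limitation described above.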

Configuring Playbooks with GPT-OSS Models

Once you have installed a GPT-OSS model, configure your SuperOptiX playbooks to use it. The examples below show the configuration for each backend.

Basic Playbook Configuration

To use GPT-OSS models in your SuperOptiX agents, you need to configure the language_model section in your playbook YAML file:

Ollama Backend (Cross-platform - RECOMMENDED):

```yaml
language_model:
  provider: ollama
  model: gpt-oss:20b  # or gpt-oss:120b
  api_base: http://localhost:11434
  temperature: 0.7
  max_tokens: 2048
```

MLX Backend (Apple Silicon - Native Support):

```yaml
language_model:
  provider: mlx
  model: lmstudio-community/gpt-oss-20b-MLX-8bit
  api_base: http://localhost:8000
  temperature: 0.7
  max_tokens: 2048
```

HuggingFace Backend (Limited on Apple Silicon):

```yaml
language_model:
  provider: huggingface
  model: openai/gpt-oss-20b
  api_base: http://localhost:8001
  temperature: 0.7
  max_tokens: 2048
```

This configuration tells SuperOptiX which GPT-OSS model your agent should use for its language tasks. The temperature parameter controls how random (and therefore how varied) the responses are, while max_tokens caps the number of tokens generated per response.
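To see what these two settings amount to at the wire level, the sketch below turns the Ollama playbook example above into an equivalent raw request for Ollama's native /api/chat endpoint, where the output cap is called `num_predict`. This is an assumption for illustration: SuperOptiX builds its requests through DSPy and may map the fields differently, and `to_ollama_chat_request` is a hypothetical name.

```python
from typing import Any, Dict, Tuple

# The Ollama playbook example, expressed as a Python dict.
language_model = {
    "provider": "ollama",
    "model": "gpt-oss:20b",
    "api_base": "http://localhost:11434",
    "temperature": 0.7,
    "max_tokens": 2048,
}


def to_ollama_chat_request(lm: Dict[str, Any], prompt: str) -> Tuple[str, Dict[str, Any]]:
    """Hypothetical mapping of a language_model block to a raw /api/chat call.

    SuperOptiX may translate these fields differently internally.
    """
    if lm.get("provider") != "ollama":
        raise ValueError("this sketch only handles provider: ollama")
    options: Dict[str, Any] = {}
    if "temperature" in lm:
        options["temperature"] = lm["temperature"]  # sampling randomness
    if "max_tokens" in lm:
        options["num_predict"] = lm["max_tokens"]  # Ollama's name for the output-token cap
    url = lm.get("api_base", "http://localhost:11434").rstrip("/") + "/api/chat"
    body = {
        "model": lm["model"],
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
        "options": options,
    }
    return url, body


url, body = to_ollama_chat_request(language_model, "Summarize GPT-OSS in one sentence.")
print(url)
```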

Performance Comparison

| Backend | Model | Status | Performance | Notes |
|---|---|---|---|---|
| Ollama | gpt-oss:20b | ✅ Works | Optimized format | RECOMMENDED |
| MLX-LM | lmstudio-community/gpt-oss-20b-MLX-8bit | ✅ Works | Native support | Apple Silicon only |
| HuggingFace | openai/gpt-oss-20b | ❌ Broken | N/A | Mixed-precision errors; avoid on Apple Silicon |

Check model status with `super model list | grep gpt-oss`. This confirms that your GPT-OSS model is installed and visible to SuperOptiX.
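If you use the Ollama backend, you can run a similar check against Ollama itself: its /api/tags endpoint lists locally installed models. The sketch below is standard-library Python, independent of SuperOptiX; the helper names are hypothetical and it assumes Ollama's default host and port.

```python
import json
import urllib.request
from typing import List


def list_ollama_models(host: str = "http://localhost:11434") -> List[str]:
    """Names of locally installed models from Ollama's /api/tags (default host assumed)."""
    with urllib.request.urlopen(f"{host}/api/tags") as response:
        data = json.load(response)
    return [model["name"] for model in data.get("models", [])]


def gpt_oss_models(names: List[str]) -> List[str]:
    """Keep only names containing "gpt-oss", like `grep gpt-oss`."""
    return sorted(name for name in names if "gpt-oss" in name)


# Example (requires a running Ollama server):
# print(gpt_oss_models(list_ollama_models()) or "no GPT-OSS model installed")
```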

Hardware Requirements

GPT-OSS-20B requires approximately 16GB of memory and is well suited for local development and testing. GPT-OSS-120B is designed for production environments that demand the highest level of reasoning capability. It is sized for a single H100 GPU, but I managed to fit it on an M4 Max with 128GB of unified memory.
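The rule of thumb above can be written as a tiny helper. The thresholds are assumptions drawn from this section (about 16GB for GPT-OSS-20B, and an 80GB H100-class budget for GPT-OSS-120B), and the RAM probe uses POSIX `sysconf`, so it works on Linux and macOS but not Windows. On machines with a discrete GPU, compare against GPU memory instead of system RAM.

```python
import os
from typing import Optional

# Assumed minimum memory (GB) per model, largest first; rules of thumb, not hard limits.
MIN_MEMORY_GB = [(80.0, "gpt-oss:120b"), (16.0, "gpt-oss:20b")]


def pick_model(memory_gb: float) -> Optional[str]:
    """Return the largest GPT-OSS tag whose assumed memory need fits, or None."""
    for needed_gb, tag in MIN_MEMORY_GB:
        if memory_gb >= needed_gb:
            return tag
    return None


def total_ram_gb() -> float:
    """Physical RAM in GB via POSIX sysconf (Linux/macOS only)."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9


# Example: print(pick_model(total_ram_gb()))
```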

Getting Started

- Install SuperOptiX: `pip install superoptix`. You can add MLX and HuggingFace support as needed.

- Install a GPT-OSS model: `super model install gpt-oss:20b`

- Test the model: `super model run gpt-oss:20b "Hello, how are you?"`

- Create your first agent project: `super init my-gpt-oss-project`

Future Enhancements

The SuperOptiX team is actively working on advanced GPT-OSS features, including configurable reasoning levels, chain-of-thought integration, agentic capabilities, and fine-tuning support. These capabilities will arrive in future releases.

- MLX Support: MLX MoE support

- Advanced Tooling: enhanced function calling and web browsing

- Performance Monitoring: detailed analytics and optimization

- Community Models: support for fine-tuned GPT-OSS variants